God particle of Big Data universe discovered: a smart sensor without ICE

- Author: Jean-Paul Smets

- June 4, 2014

- Big Data

In computer science, part of the future can be predicted through a simple axiom: “what happens at CERN soon happens everywhere.” We could add a second axiom: “what starts centralized ends up decentralized.” How does this apply to Big Data?

BIG DATA IS USUALLY SMALL

Many so-called “Big Data” problems are not that big. The size of 5 years of transactions of a central bank is about 100 GB. One year of central bank transactions can thus fit into a smartphone. The size of all transactions of an insurance company for a single country is less than 4 TB. Insurance data can fit onto a single hard disk.

Many data analysis problems for which companies are investing in expensive infrastructure marked with a fashionable “Big Data” label could actually be solved with a laptop computer – or even a smartphone – and open source software. Open source software such as Scikit-Learn [1], Pandas [2] or NLTK [3] is used by researchers and financial institutions worldwide to process transactional data and customer relationship data. A traditional database such as MariaDB [4] can nowadays handle up to 1 million inserts per second. MariaDB 10.0 [5] even includes replication technologies created by Taobao developers that are designed to scale.

My recommendation before making expensive investments: first purchase a small GNU/Linux server with at least 32 GB of memory and a large SSD (e.g. 1 TB), and study the Scikit-Learn tutorial “Machine Learning 102” [30] (based on the lectures of Andrew Ng, who recently moved to Baidu [31]). In most cases, this will be enough to solve your problems. If not, you will be able to design a prototype that can later scale on a bigger infrastructure. Scikit-Learn is, by the way, the toolkit used by many engineers at Google to prototype solutions for their “Big Data” problems.

SMALLEST PARTICLES PRODUCE BIGGEST DATA

Extreme challenges posed by research on nuclear physics and small particles recurrently lead to the creation of new information technologies. HTML was invented by Tim Berners-Lee in 1991 at the European Organization for Nuclear Research – known as CERN – to solve the problem of large scale document management. The Large Hadron Collider (LHC) at CERN has been designed to process 1 petabyte of data per second. Its experiments announced the discovery of a candidate Higgs boson in 2012, confirmed in 2013 [6], settling a question that had remained open for nearly 50 years.

Let us understand what 1 petabyte of data per second means. 1 petabyte is the same as 1,000 terabytes, 1,000,000 gigabytes or 13.3 years of HD video. Being able to process 1 petabyte of data per second is thus equivalent to being able to process the data generated by 419,428,800 (13.3 * 365 * 24 * 3600) HD video cameras. This is 15 times more than the number of CCTV cameras in China [7] and 100 times more than in the United Kingdom [8].
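The arithmetic above can be checked in a few lines of Python. This is only a back-of-the-envelope sketch: it takes the article's figure of 13.3 years of HD video per petabyte as given, assumes decimal units (1 PB = 10^15 bytes), and derives the bit rate that this figure implies for a single camera.

```python
# Back-of-the-envelope check of the camera equivalence.
SECONDS_PER_YEAR = 365 * 24 * 3600        # 31,536,000 seconds
YEARS_OF_HD_VIDEO_PER_PETABYTE = 13.3     # figure used in the text
PETABYTE = 10**15                         # bytes, decimal units (assumption)

# One camera needs 13.3 years to produce 1 PB, so producing 1 PB every
# second takes as many cameras as there are seconds in 13.3 years.
cameras = round(YEARS_OF_HD_VIDEO_PER_PETABYTE * SECONDS_PER_YEAR)

# Bit rate of a single camera implied by the 13.3-year figure.
camera_mbit_per_s = PETABYTE * 8 / cameras / 1e6

print(f"{cameras:,} cameras")
print(f"{camera_mbit_per_s:.1f} Mbit/s per camera")
```

The implied per-camera rate is a useful sanity check: if it looked implausible for HD video, the 13.3-year figure would be suspect.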

Overall, technologies created at CERN for small particles could be applied to collect and process in real time all data produced by every human being on the planet in the form of sound, video, health monitoring, smart fabric logs, etc.

INTRODUCING SMART SENSORS

The key concept that explains the success of the CERN Big Data architecture is its ability to throw away most of the collected data as soon as possible and eventually store only a tiny fraction of it [9]. This is achieved by moving most of the data processing to smart sensors that are capable of so-called “artificial intelligence”, in reality advanced statistics also known as machine learning.

One of the sensors at the LHC – called the Compact Muon Solenoid (CMS) [10] – collects 3 terabytes of picture data per second that represent small particles colliding. It then throws away pictures that are considered irrelevant and sends “only” 100 megabytes per second to the LHC storage infrastructure, that is 30,000 times less than what it initially collected. The sensor itself uses an FPGA, a kind of programmable hardware that can process data faster than most processors, to implement a machine learning algorithm called “clustering” [11].
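The "throw away early" idea can be illustrated with a small sketch. It assumes the stored rate is 100 megabytes per second, the value consistent with the quoted factor of 30,000. The `trigger` function and the random "energy" score are hypothetical stand-ins: a plain threshold filter, not the clustering algorithm that CMS runs on its FPGAs.

```python
import random

# Data rates in bytes per second (stored rate is an assumption, see above).
COLLECTED = 3 * 10**12      # 3 terabytes per second
STORED = 100 * 10**6        # 100 megabytes per second
reduction = COLLECTED // STORED

def trigger(events, threshold):
    """Yield only the events whose score exceeds the threshold."""
    for event in events:
        if event["energy"] > threshold:
            yield event

# Hypothetical events with a random "energy" score between 0 and 1.
rng = random.Random(0)
events = [{"id": i, "energy": rng.random()} for i in range(100_000)]

# Keep roughly one event in a thousand; everything else is dropped at once.
kept = list(trigger(events, threshold=0.999))

print(f"reduction factor: {reduction:,}")
print(f"kept {len(kept)} of {len(events)} events")
```

Because `trigger` is a generator, rejected events never accumulate in memory, which mirrors the point of filtering inside the sensor rather than after storage.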

If we wanted to apply the ideas of the LHC to CCTV surveillance, we would possibly store a couple of hours of video in each video camera and use an FPGA or a GPU to process video data in real time, directly inside the camera. We would use re-programmable artificial intelligence to detect the number of people, their gender, their size, their behavior (peaceful, violent, thief, lost, working, etc.), the presence of objects (e.g. a suitcase) or the absence of an object (e.g. a public light). Only this metadata would be sent over the network to a central processing facility that could decide, if necessary, to download relevant pictures or portions of video. And in case a CCTV area gets disconnected by criminals, a consumer drone [12] could be sent to check what is happening.

Overall, the LHC teaches us how to quickly build an efficient video surveillance system with a smaller investment or a wider reach. This system can be deployed on existing narrowband telecommunication networks – including GSM – anywhere in the world. It is also probably more resilient than systems that store and process everything in a central place. And it can still work off-line or during a network outage.
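The camera design described above can be sketched as follows. Everything here is hypothetical: `SmartCamera`, `analyse_frame` and the metadata fields are illustrative names, and the detector is a stub that returns fixed values where a real camera would run a model on its FPGA or GPU.

```python
import json
from collections import deque

def analyse_frame(frame: bytes) -> dict:
    """Stand-in for the on-camera model; a real one would inspect the pixels."""
    # Hypothetical fixed result, for illustration only.
    return {"people": 3, "objects": ["suitcase"], "behavior": "peaceful"}

class SmartCamera:
    def __init__(self, camera_id: str, buffer_frames: int):
        self.camera_id = camera_id
        # Raw video stays in the camera; the oldest frames are dropped first.
        self.buffer = deque(maxlen=buffer_frames)

    def process(self, timestamp: float, frame: bytes) -> bytes:
        """Store the frame locally and return the metadata message to send."""
        self.buffer.append((timestamp, frame))
        metadata = analyse_frame(frame)
        metadata.update(camera=self.camera_id, time=timestamp)
        return json.dumps(metadata).encode("utf-8")

    def fetch(self, start: float, end: float) -> list:
        """Return buffered frames requested by the central facility."""
        return [f for t, f in self.buffer if start <= t <= end]

camera = SmartCamera("cam-001", buffer_frames=3)
frame = bytes(1_000_000)                      # dummy 1 MB frame
messages = [camera.process(float(t), frame) for t in range(5)]

print(len(messages[0]), "bytes sent instead of", len(frame))
```

The bounded `deque` plays the role of the "couple of hours of video" kept in the camera, and `fetch` is the path the central facility would use to download relevant footage on demand.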

SMART PRIVACY, SMART MARKETS

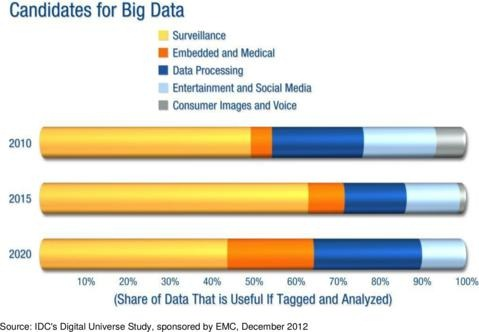

“With the tapping program code-named PRISM, the U.S. government has infringed on the privacy rights of people both at home and abroad” explains the report on the U.S. human rights situation published by Chinese government on 28th Feburary 2014 [13]. Similar programs have been implemented in countries abid strict privacy Law [27]. With 65% market share dedicated to surveillance and strong economic forces behind, Big Data is one of the technologies that can infringe the most on the privacy rights if it is not regulated.

Candidate Markets for Big Data [14]

Smart sensors provide a possible solution, as long as their code can be audited by an independent authority in charge of privacy. By dropping, encrypting, and anonymising most data, privacy law can be enforced at the origin, inside the sensor. The risk of abuse of the surveillance system is reduced by the absence of raw data transmission and by the absence of central storage. Sensor access logs can be published as open data to ensure complete auditability.
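A minimal sketch of what "enforcing privacy inside the sensor" could look like, using only the Python standard library. The field names, the whitelist and the `sanitise` helper are assumptions made for illustration. The sketch covers dropping and pseudonymising only; encryption is left out because it would need a third-party library.

```python
import hashlib
import hmac

# Hypothetical whitelist: only these fields ever leave the sensor.
ALLOWED_FIELDS = {"time", "zone", "people_count"}

def pseudonymise(identifier: str, key: bytes) -> str:
    """Replace an identifier with a keyed hash (HMAC-SHA256)."""
    return hmac.new(key, identifier.encode("utf-8"), hashlib.sha256).hexdigest()

def sanitise(record: dict, key: bytes) -> dict:
    """Drop raw data and pseudonymise identifiers before transmission."""
    clean = {k: v for k, v in record.items() if k in ALLOWED_FIELDS}
    if "plate" in record:
        clean["vehicle"] = pseudonymise(record["plate"], key)
    return clean

sensor_key = b"key-held-inside-the-sensor"    # illustrative secret
record = {
    "time": 1401840000,
    "zone": "A",
    "people_count": 2,
    "plate": "ABC123",          # identifier: pseudonymised
    "face_image": b"\x00\x01",  # raw data: dropped
}
outgoing = sanitise(record, sensor_key)
print(outgoing)
```

A keyed hash lets the central facility recognise the same vehicle twice without learning the plate, provided the key never leaves the sensor. An auditor reviewing the sensor code would only need to check the whitelist and the handling of that key.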

Upgrading CCTV to smart cameras in China alone represents a yearly market of up to 200 billion RMB. A national upgrade program could be an occasion to build core features of a “smart city” directly inside smart cameras: public Internet access, Web acceleration, Cloudlet, mobile storage offload, geographic positioning, multi-access mesh networking, barrier-free tolling systems, etc. Those are only a few of the many applications that could be developed and later exported worldwide, since China is the largest producer of CCTV systems and already a partner of the foreign defense industry [15].

INTERNET OF THINGS MEANS EVEN BIGGER DATA

By 2020, surveillance will no longer be the primary market for Big Data. According to Gartner, 26 billion objects will then be connected to the Internet [16], over 100 times the number of CCTV cameras worldwide. Connected objects include industrial sensors in factories, cars, consumer electronics, wind turbines, traffic lights, etc.

Preventive maintenance through failure prediction – a direct application of machine learning and Big Data – will then be embedded into objects, along with other smart features. GPUs in low cost systems on chip (SoC) will be used to implement fast machine learning at low cost [17].

Chinese industry already has an edge in applications that combine the Internet of Things with Big Data. The recent alliance of ARM, Spreadtrum, Allwinner, Rockchip, Huawei and others [18] highlights the growing importance of ARM-based solutions designed in China. It is now possible to imagine that in a few years, a system on chip with GPU, networking and a Linux operating system will cost less than 1 USD. At this price, it would become the standard component to implement machine learning algorithms for connected smart devices. On the higher end, a Big Data cluster could be designed with multi-core ARM systems on chip (SoC) and solid state disks (SSD). And for the first time, all components could be sourced in China at a lower cost than Intel for equivalent performance.
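To give an idea of what embedded failure prediction can look like at its simplest, here is a sketch that flags sensor readings far from their recent average. It is a plain rolling z-score, used here as a stand-in for a trained machine learning model; the function name, window size, threshold and vibration data are all hypothetical.

```python
from collections import deque
from statistics import mean, stdev

def detect_anomalies(readings, window=20, threshold=3.0):
    """Return indices of readings far from the mean of the previous window."""
    history = deque(maxlen=window)
    alerts = []
    for i, value in enumerate(readings):
        if len(history) == window:
            mu, sigma = mean(history), stdev(history)
            # Skip perfectly flat windows to avoid dividing the world by zero.
            if sigma > 0 and abs(value - mu) > threshold * sigma:
                alerts.append(i)
        history.append(value)
    return alerts

# Hypothetical vibration readings: steady, then a sudden jump at index 60.
readings = [1.0 + 0.01 * (i % 5) for i in range(60)] + [2.0] * 5
alerts = detect_anomalies(readings)
print("first alert at index", alerts[0])
```

The detector needs only the last `window` readings in memory, so it fits the constraints of a low cost chip. Only the alert would have to be sent over the network, not the raw readings.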

Mobile Computing Association (MCA) established in Shenzhen in April 2014 (Credit: Bob Peng, ARM) [18]

What is probably missing now is software to process data with efficient distributed algorithms. Considering the recent “No ICE” policy [20] (a.k.a. “No IOE”) that has been discussed in China and the strong dependency of Hadoop on Java, a product now controlled by Oracle, it could be a good time to consider other software solutions for Big Data. Recently, many communities seem to be unifying their data processing efforts around Python’s NumPy open source technology [19,21], while others create new languages such as Julia [22]. One of the biggest challenges to solve is “out-of-core” data processing, that is, processing data beyond the limits of available memory. Projects such as Wendelin [23] and Blaze [24] are already on track to provide open source solutions.

Overall, our guess is that “No ICE” solutions will be created in one of the Big Data projects being launched in China – in Guizhou [25] or in Xinjiang [26] for example – backed by budgets of tens of billions of RMB that open the door to truly innovative technologies able to cope with the exabytes, if not zettabytes, of data produced by smart sensors.
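The principle behind “out-of-core” processing can be shown with a small pure-Python sketch: the data is consumed one chunk at a time and only a few running totals are kept in memory. This is an illustration of the idea, not how Wendelin or Blaze work; the helper names and chunk size are hypothetical.

```python
import math

def chunked(iterable, size):
    """Yield successive lists of at most `size` items."""
    chunk = []
    for item in iterable:
        chunk.append(item)
        if len(chunk) == size:
            yield chunk
            chunk = []
    if chunk:
        yield chunk

def streaming_mean_std(values, chunk_size=1000):
    """Mean and population standard deviation without loading all values."""
    count, total, total_sq = 0, 0.0, 0.0
    for chunk in chunked(values, chunk_size):
        count += len(chunk)
        total += sum(chunk)
        total_sq += sum(x * x for x in chunk)
    mean = total / count                     # raises on empty input
    variance = total_sq / count - mean * mean
    return mean, math.sqrt(max(variance, 0.0))

# The generator below never exists in memory as a full list.
values = (float(i) for i in range(1, 1_000_001))
mean, std = streaming_mean_std(values, chunk_size=10_000)
print(f"mean={mean:.1f} std={std:.1f}")
```

Memory use depends on `chunk_size`, not on the size of the dataset, so the same code works whether the values come from a generator, a file read line by line, or a network stream. The sum-of-squares formula is kept for brevity; a production version would likely prefer a numerically more stable update.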

ACKNOWLEDGEMENT

I would like to thank Thomas Serval of Kolibree [28], Maurice Ronai of Items [29], Hervé Rannou of Cityzen Data [32], Mathias Herberts of Cityzen Data [32] and Alexandre Gramfort of Scikit-Learn [1] for the ideas they shared on Big Data.

References

[1] Scikit-Learn – http://scikit-learn.org/

[2] Pandas – http://pandas.pydata.org/

[3] NLTK – http://www.nltk.org/

[4] ScaleDB – http://www.scaledb.com/

[5] MariaDB 10.0 announcement and multimaster replication

[6] LHC – http://en.wikipedia.org/wiki/Higgs_boson#Discovery_of_candidate_boson_at_CERN

[7] Big Brother in China is watching, with 30 million surveillance cameras – http://news.msn.com/world/big-brother-in-china-is-watching-with-30-million-surveillance-cameras-1

[8] Revealed: Big Brother Britain has more CCTV cameras than China – http://www.dailymail.co.uk/news/article-1205607/Shock-figures-reveal-Britain-CCTV-camera-14-people–China.html

[9] The Large Hadron Collider Throws Away More Data Than It Stores – http://gizmodo.com/5914548/the-large-hadron-collider-throws-away-more-data-than-it-stores

[10] Compact Muon Solenoid – http://en.wikipedia.org/wiki/Compact_Muon_Solenoid

[11] FPGA Design Analysis of the Clustering Algorithm for the CERN Large Hadron Collider – http://homepages.cae.wisc.edu/~aminf/FCCM09%20-%20FPGA%20Design%20Analysis%20of%20the%20Clustering%20Algorithm%20for%20the%20CERN%20Large%20Hadron%20Collider.pdf

[12] Parrot Bebop Drone : caméra HD et compatibilité avec l’Oculus Rift – http://www.lesnumeriques.com/robot/parrot-bebop-drone-p20562/parrot-bebop-drone-camera-hd-compatibilite-avec-oculus-rift-n34313.html

[13] Commentary: U.S. should “sweep its own doorstep” on human rights – http://news.xinhuanet.com/english/indepth/2014-02/28/c_133150005.htm

[14] Big Data, Bigger Digital Shadows, and Biggest Growth in the Far East – http://idcdocserv.com/1414

[15] Huawei, Thales, Orange set up video surveillance in Abidjan – http://www.telecompaper.com/news/huawei-thales-orange-set-up-video-surveillance-in-abidjan–975487

[16] Gartner predicts the presence of 26 billion devices in the ‘Internet of Things’ by 2020 – https://storageservers.wordpress.com/2014/03/19/gartner-predicts-the-presence-of-26-billion-devices-in-the-internet-of-things-by-2020/

[17] Python on the GPU with Parakeet – http://vimeo.com/79556629

[18] Mobile Computing Association MCA Established in Shenzhen – http://www.marce.com.cn/china-tablets-industry/arm-counterattack-on-intel-mobile-computing-association-mca-established-in-shenzhen.html

[19] The homogenization of scientific computing, or why Python is steadily eating other languages’ lunch – http://www.talyarkoni.org/blog/2013/11/18/the-homogenization-of-scientific-computing-or-why-python-is-steadily-eating-other-languages-lunch/

[20] China’s No ‘ICE’ Policy – http://www.datacenterdynamics.com/blogs/china%E2%80%99s-no-ice%E2%80%99-policy-0

[21] Anaconda – https://store.continuum.io/cshop/anaconda/

[22] Julia – http://julialang.org/

[23] Wendelin Exanalytics – http://www.wendelin.io/

[24] Blaze – http://blaze.pydata.org/

[25] Guizhou aims to become big data hub – http://guizhou.chinadaily.com.cn/2014-03/03/content_17317216.htm

[26] Big data center to be built in Xinjiang – http://www.chinadaily.com.cn/china/2013-12/04/content_17152426.htm

[27] Révélations sur le Big Brother français – http://www.lemonde.fr/societe/article/2013/07/04/revelations-sur-le-big-brother-francais_3441973_3224.html

[28] Kolibree – http://www.kolibree.com

[29] Items International – http://www.cityzendata.com/

[30] Machine Learning 102:Practical Advice – http://www.astroml.org/sklearn_tutorial/practical.html

[31] Machine learning godfather Andrew Ng joined the Baidu force deep learning – https://www.ctocio.com/ccnews/15615.html

[32] Cityzen Data – http://www.cityzendata.com
